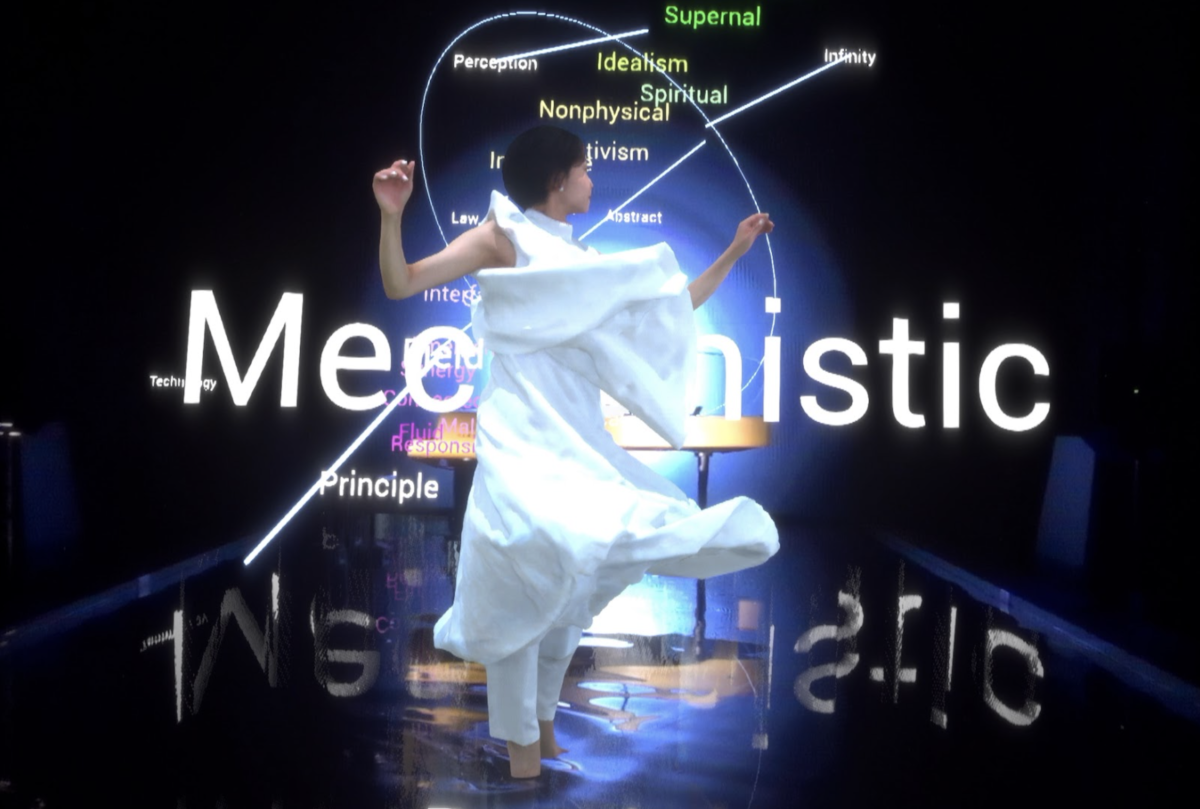

“Syn : Unfolded Horizon of Bodily Senses” by Rhizomatiks × ELEVENPLAY was presented from Oct. 6th – Nov. 12th, 2023 to commemorate the opening of the TOKYO NODE exhibition space atop the Toranomon Hills Station Tower.

Read on for a behind-the-scenes look at the making of this latest collaboration between Rhizomatiks (led by Daito Manabe and Motoi Ishibashi) and ELEVENPLAY (led by MIKIKO).

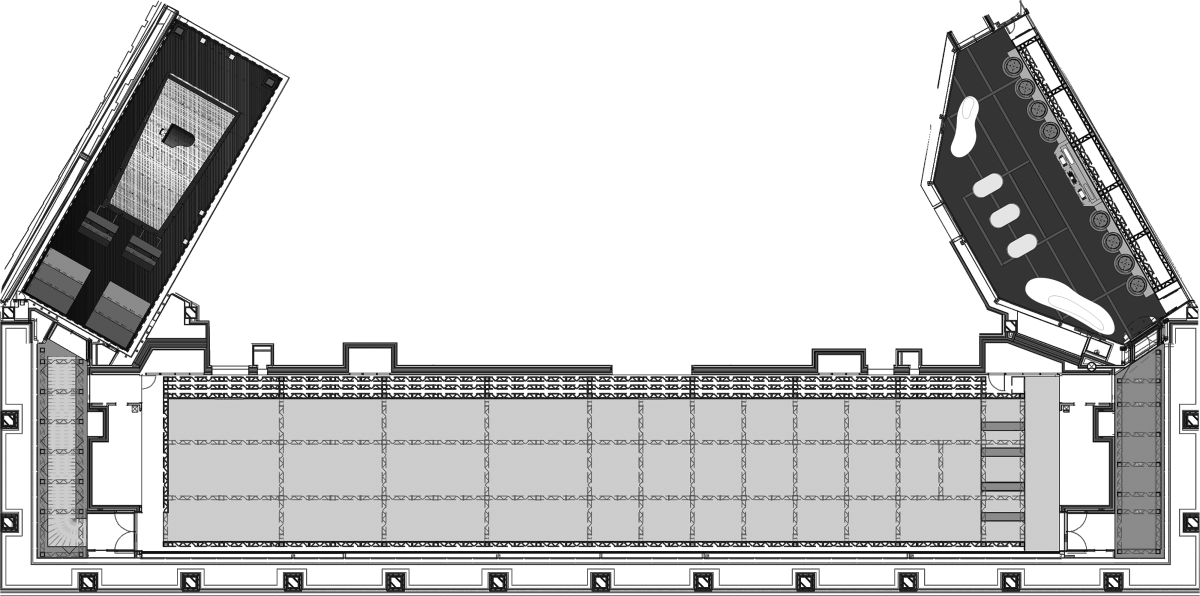

<Zoning>

The performance was an immersive theater experience, spanning three galleries for a total of nearly 1,500m² of floor space. Instead of being seated in chairs, audiences moved through these galleries as active participants and witnesses to the unfolding spectacle. To this end, even the passageways connecting the galleries were important to creating the effect of a cohesive world.

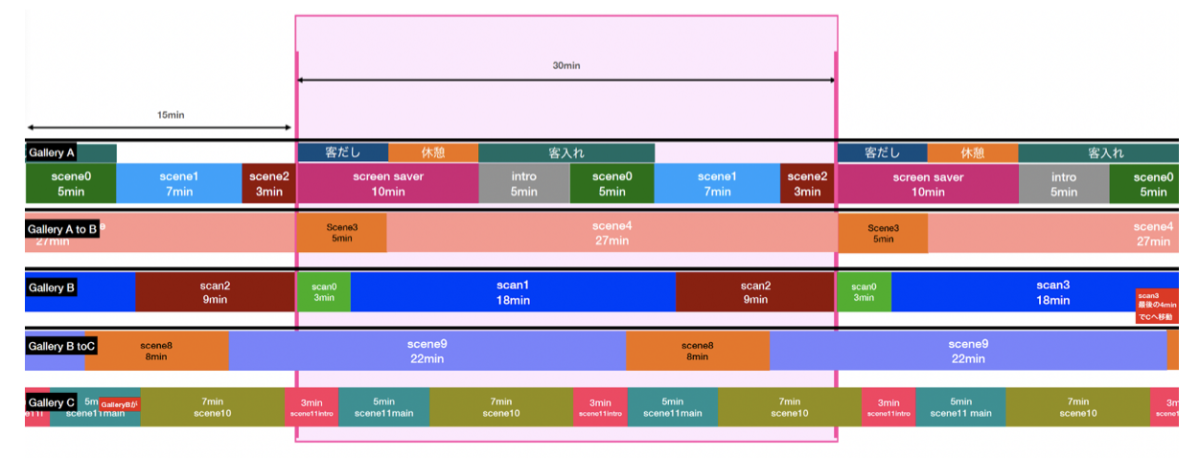

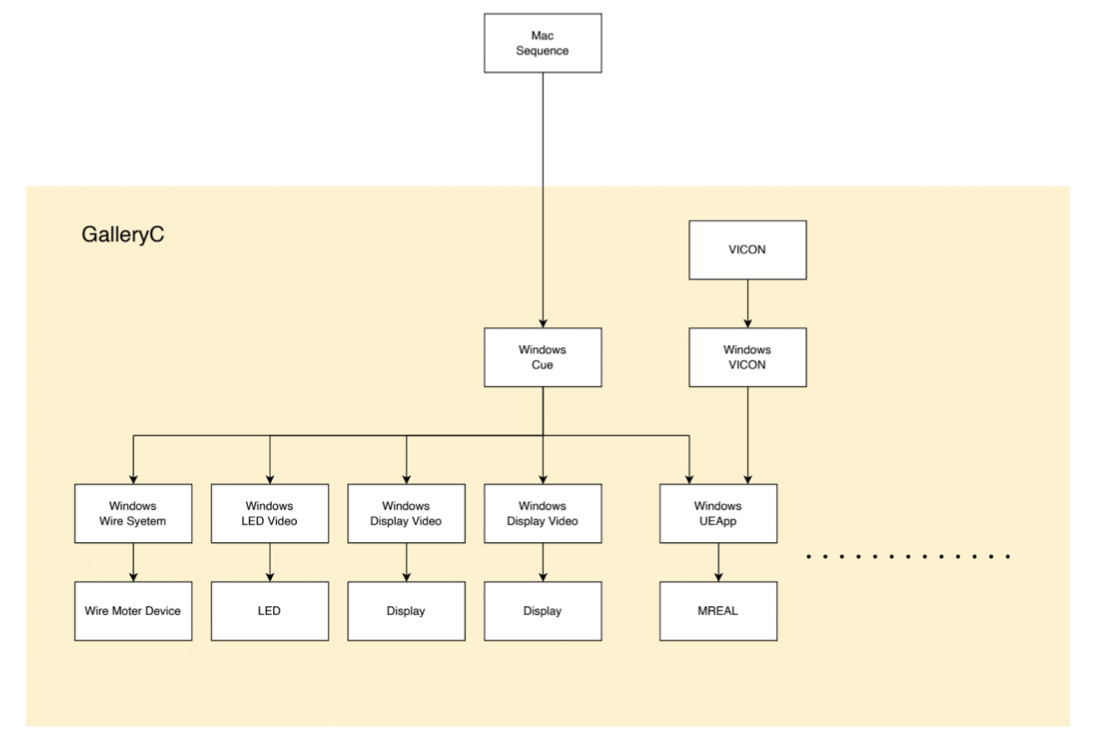

<Sequence>

Each performance lasted approximately 70 minutes from start to finish across the three galleries. At 30-minute intervals, a new group of audience members began their journey in Gallery A. Two groups would converge at a reflexive turning point in Gallery B, experiencing the same performance segment while separated on opposite sides of a large, mobile wall. Meticulous sequencing was thus required to accommodate the total of 17 performances each day (see chart below).
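To make the overlap concrete, here is a minimal Python sketch of the timetable arithmetic. The 70-minute run time, 30-minute interval, and 17 daily performances come from the text. The 11:00 opening time and the function names are assumptions for illustration only, not the actual timetable.

```python
from datetime import datetime, timedelta

PERFORMANCE_MINUTES = 70   # from the text: approximate run time
INTERVAL_MINUTES = 30      # from the text: a new group every 30 minutes
PERFORMANCES_PER_DAY = 17  # from the text


def build_schedule(first_start):
    """Return a list of (start, end) datetimes, one pair per group."""
    schedule = []
    for i in range(PERFORMANCES_PER_DAY):
        start = first_start + timedelta(minutes=i * INTERVAL_MINUTES)
        end = start + timedelta(minutes=PERFORMANCE_MINUTES)
        schedule.append((start, end))
    return schedule


def groups_in_venue(schedule, t):
    """Indices of the groups that are somewhere in the galleries at time t."""
    return [i for i, (start, end) in enumerate(schedule) if start <= t < end]


# Hypothetical opening time; the real timetable is not given in the text.
first_start = datetime(2023, 10, 6, 11, 0)
schedule = build_schedule(first_start)
last_start, last_end = schedule[-1]
```

Because 70 minutes is longer than two 30-minute intervals, up to three groups can be inside the venue at once under these assumptions, which is why the galleries had to be sequenced so tightly.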

◉GALLERY A

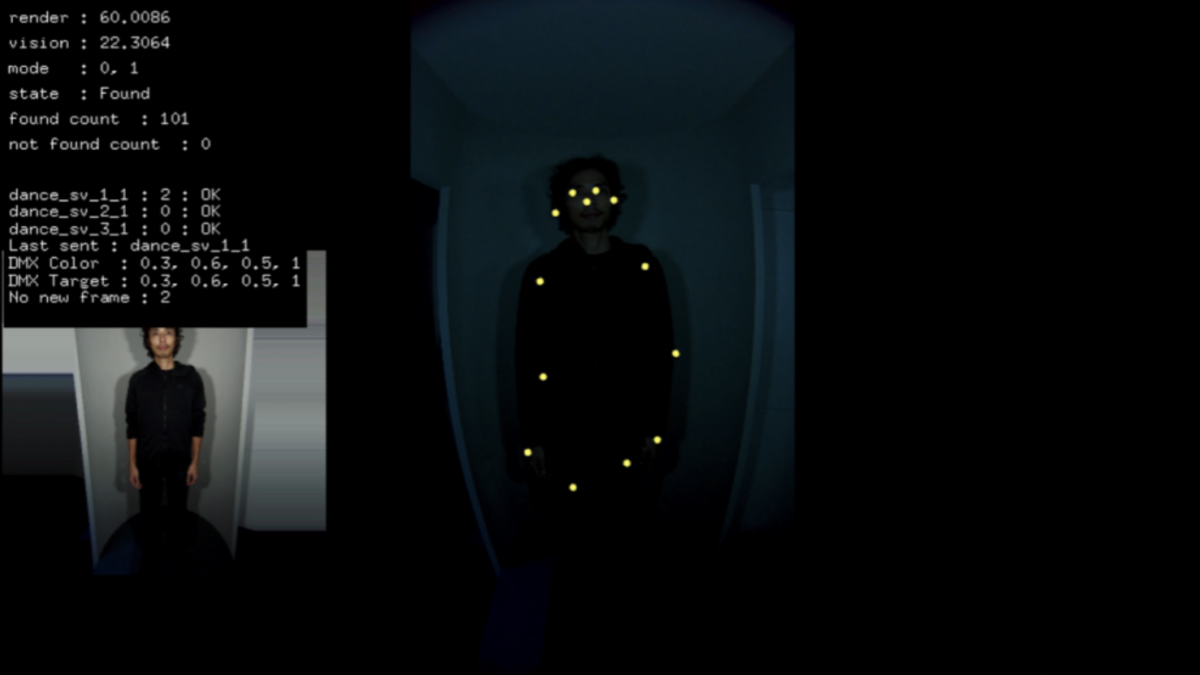

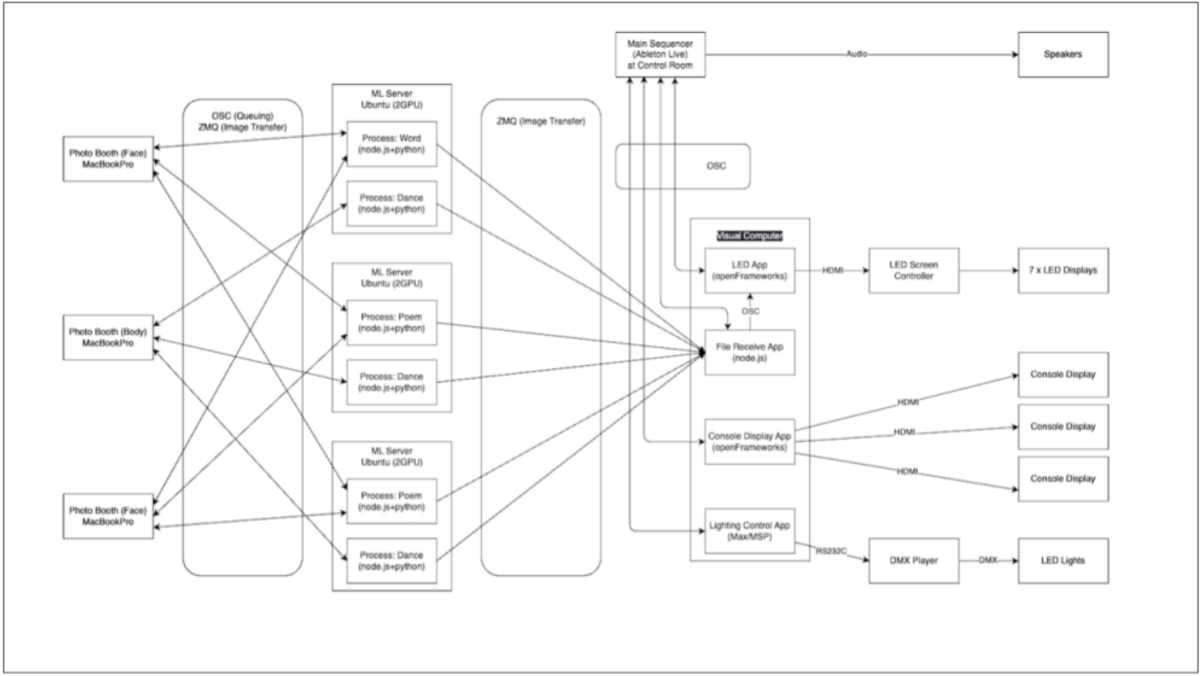

<AI Installation>

photo by Muryo Homma

Three photo booths were set up in Gallery A. When an audience member entered a booth, camera image analysis automatically recognized their face and took a photo. AI then dynamically transformed these images into videos, making it appear that audience members were lip syncing to the music and dancing along to the choreography of the ELEVENPLAY dancers. These videos were displayed with realtime effects as part of the closing video segment on the venue’s LED screen, thereby making the audience members a part of the performance.

(Photo booth analyzer view)

System diagram

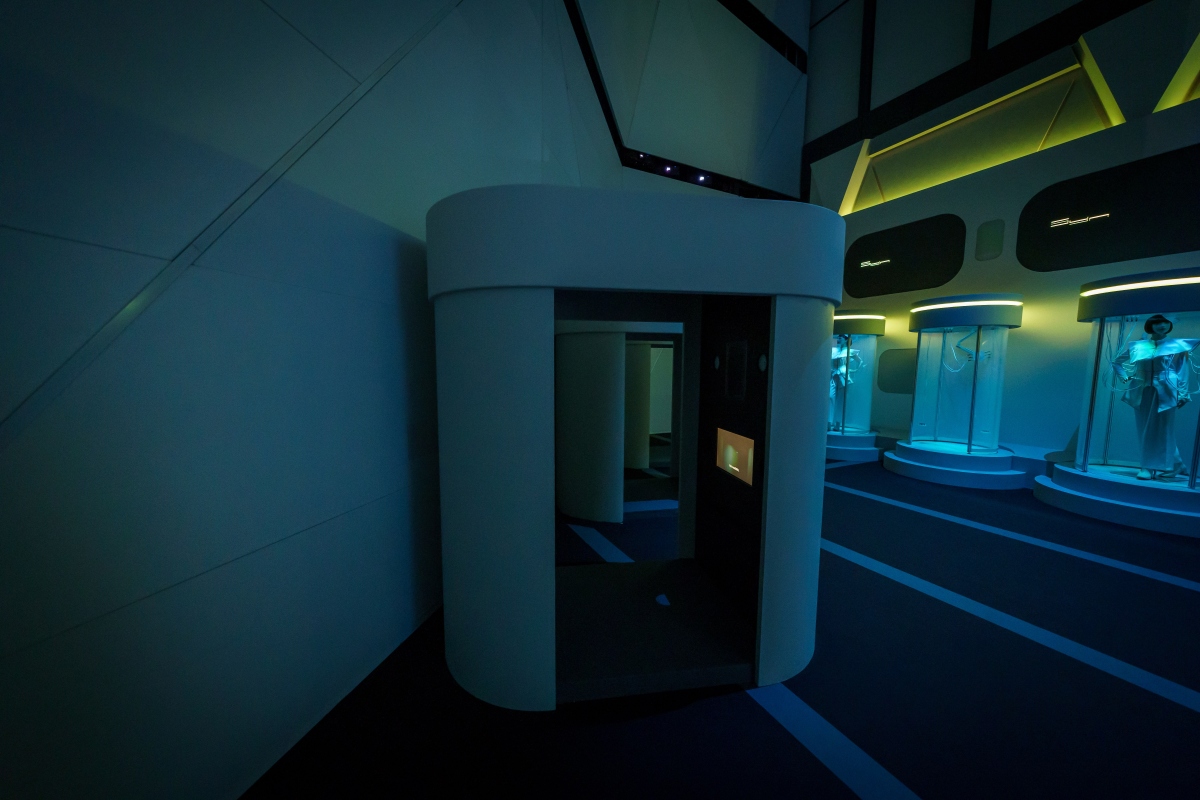

◉GALLERY A to B

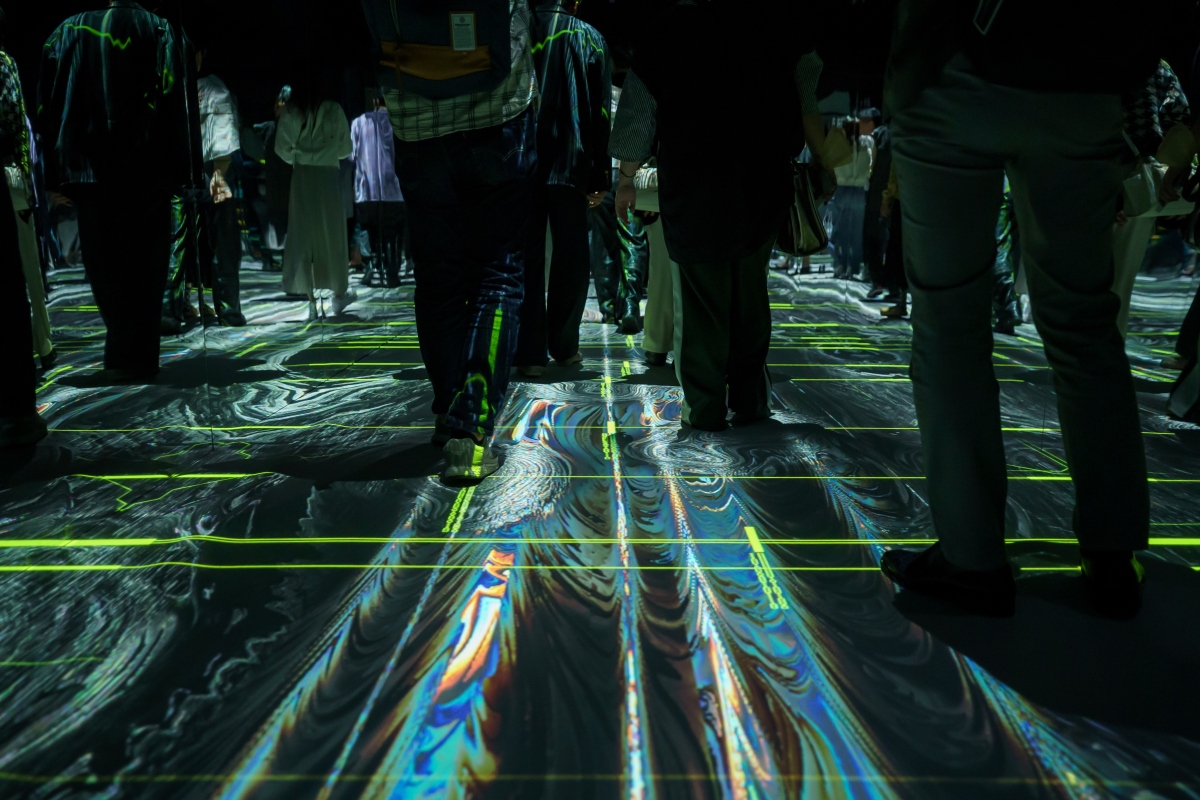

photo by Muryo Homma

Video imagery was projected on the entire floor of the passageway connecting galleries A and B. The video imagery responded interactively as patrons moved through the passageway.

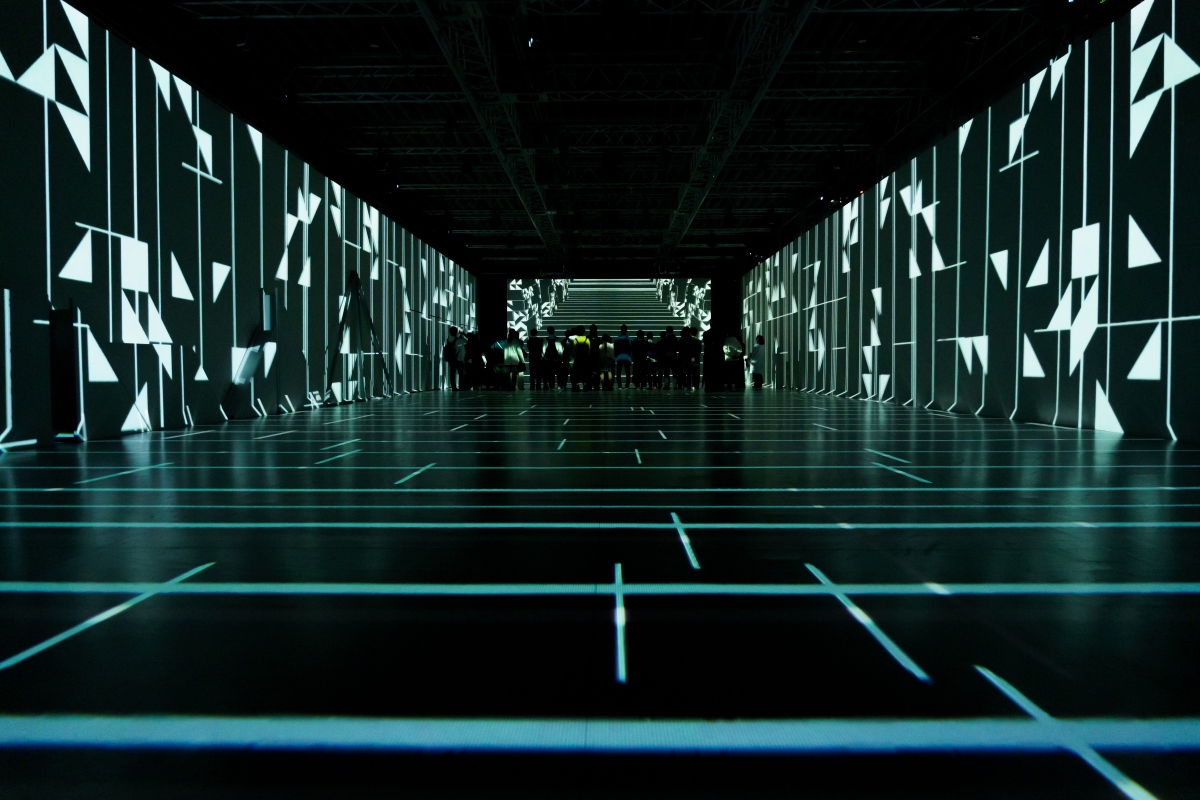

◉GALLERY B

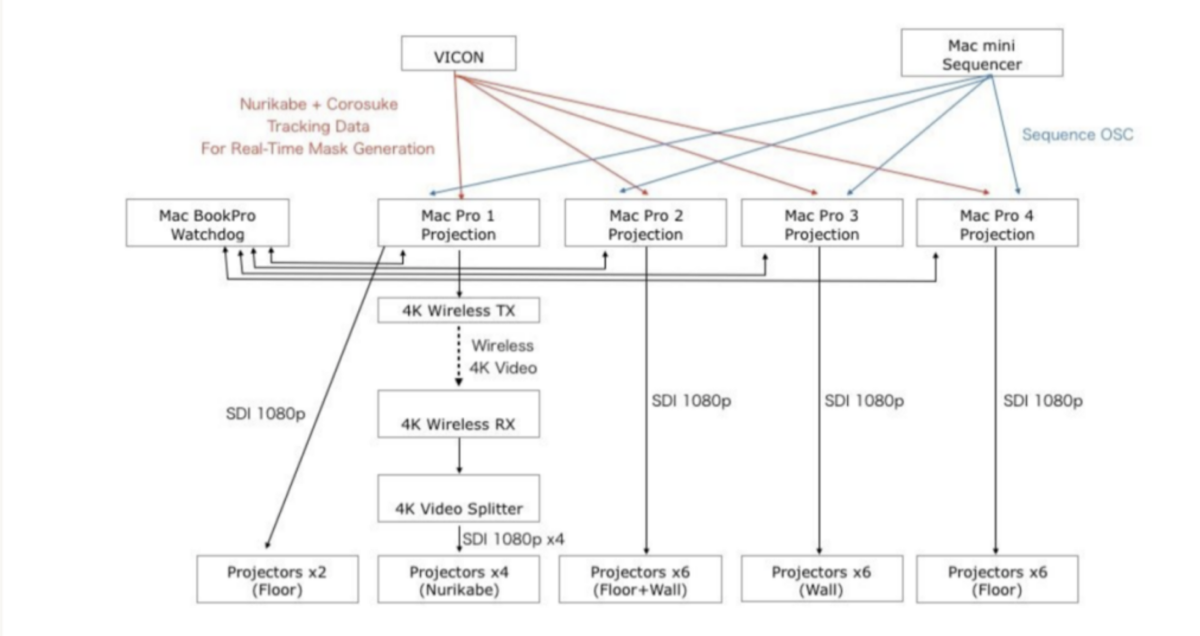

<Projection System>

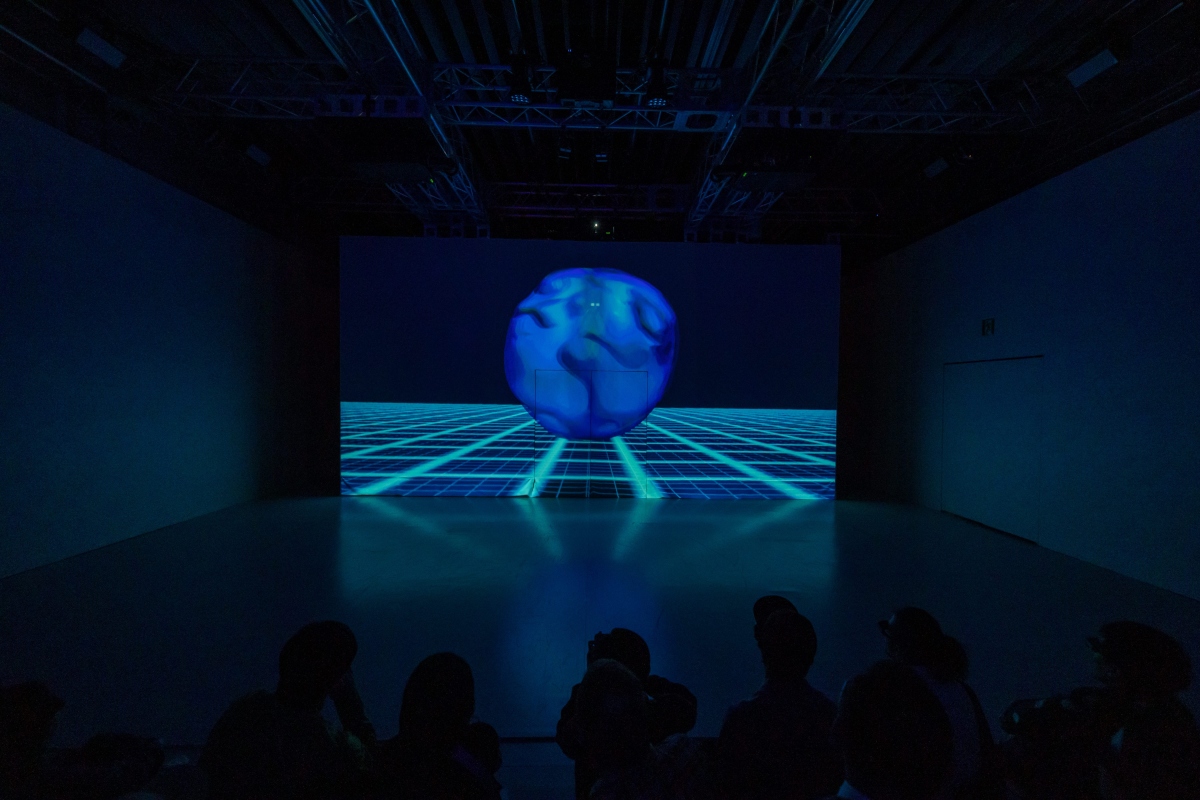

photo by Muryo Homma

In Gallery B, a total of 24 projectors displayed video imagery from four Mac Pros. Four projectors moved in tandem with the large mobile wall; nine projectors were responsible for the floor imagery; and eleven projectors were used to project imagery on the walls. The four mobile projectors and eleven wall projectors were synchronized with 3D active shutter glasses worn by patrons to display stereoscopic 3D imagery. The CG projections seen in the gallery were based on pre-rendered stereoscopic 3D 360° video. Accounting for the position of the large mobile wall and moving trolley system in real time, we applied further video distortion and object masking to the CG projections to achieve a highly immersive effect.

Control Panel

System Configuration

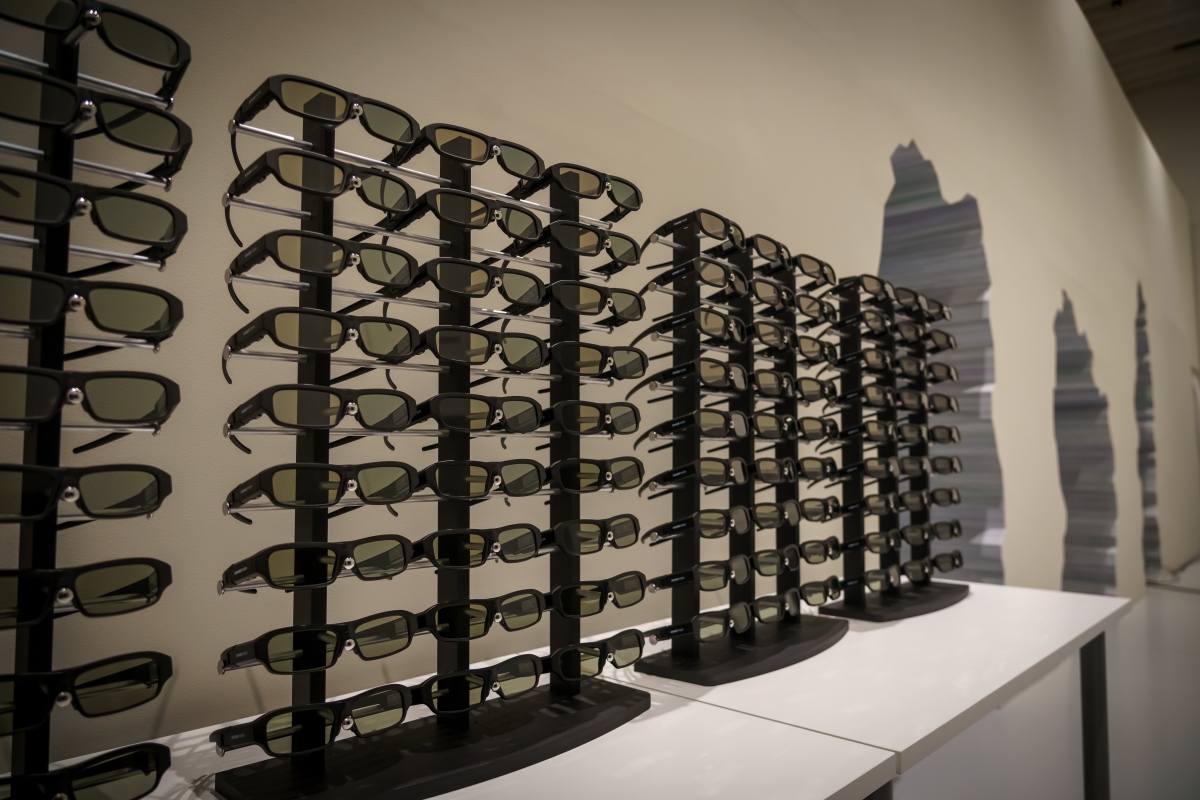

<3D active shutter glasses>

photo by Muryo Homma

All patrons were asked to don 3D active shutter glasses upon entering Gallery B.

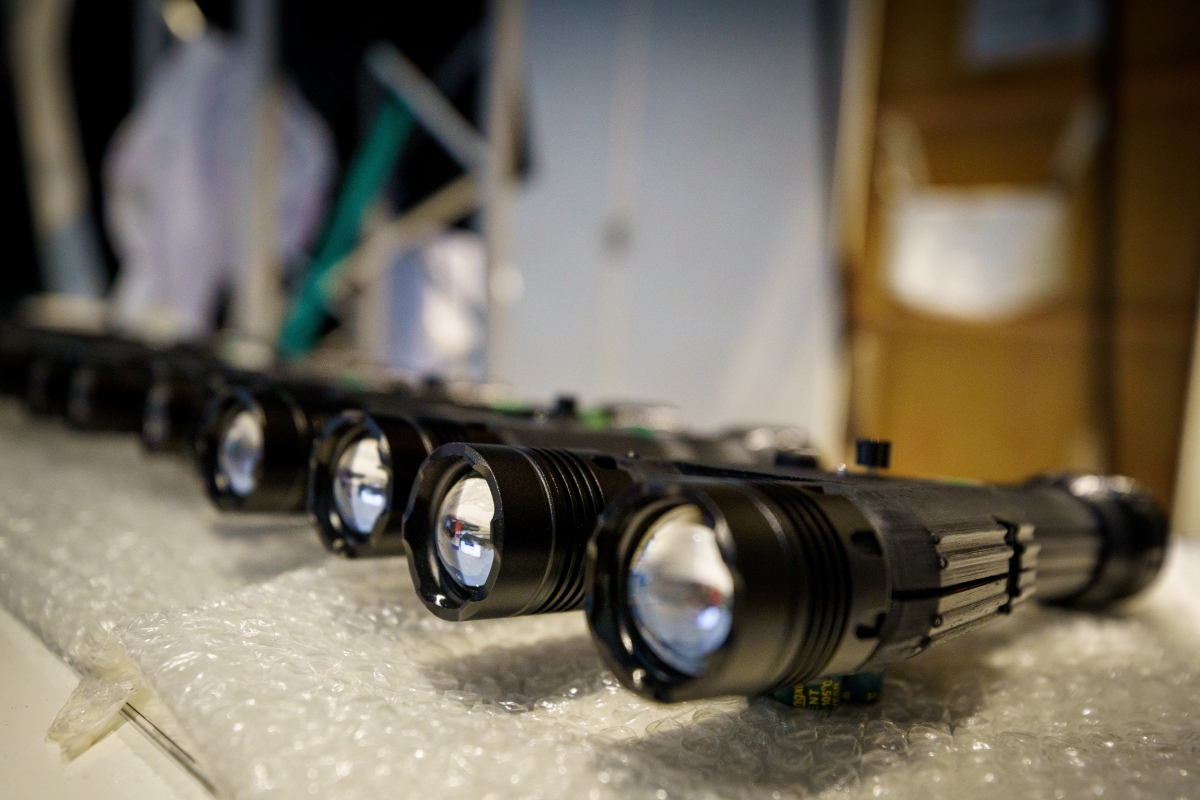

<Stereo Light>

photo by Muryo Homma

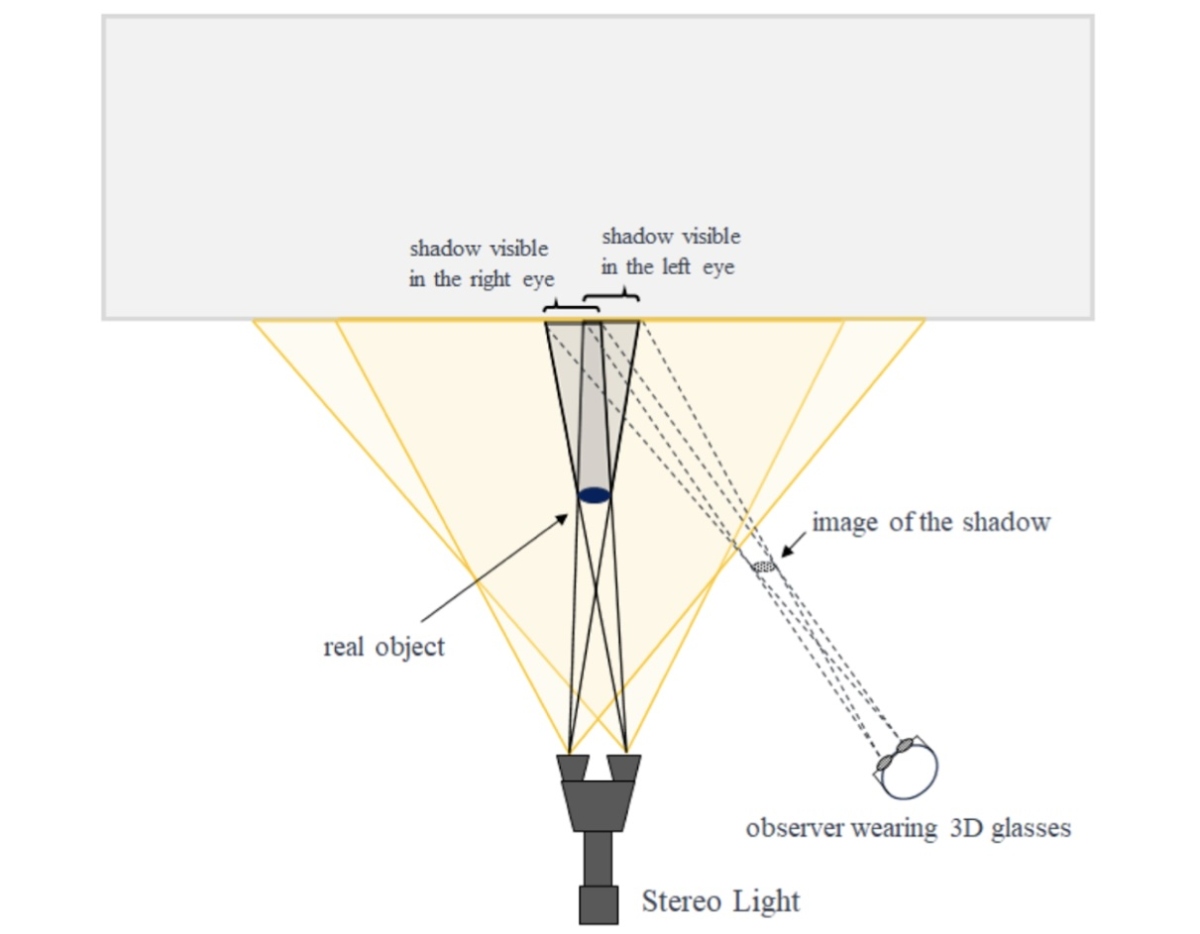

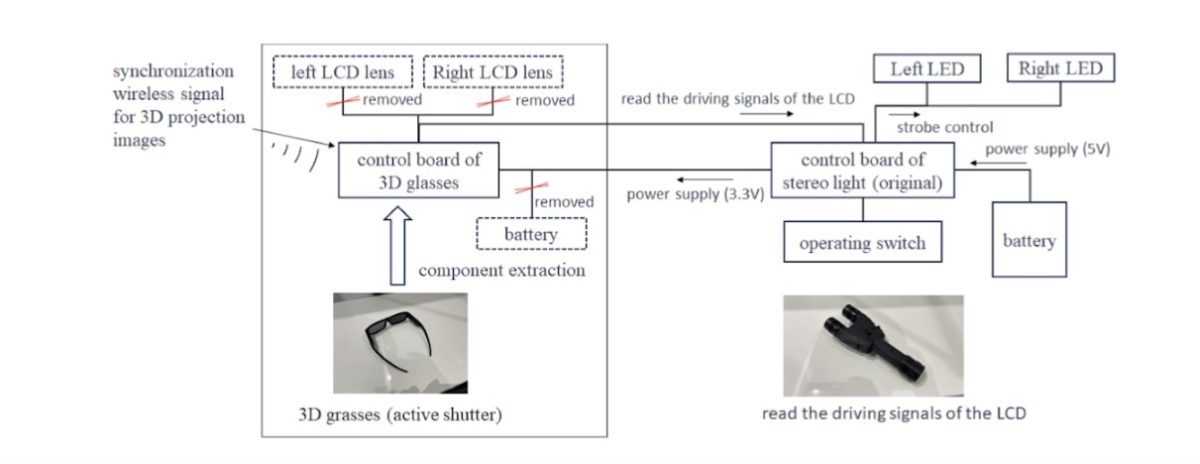

A device specially developed for this performance featured dual floodlights that flashed in sync with the 3D active shutter glasses. The resulting shadows appeared different when viewed through the left and right lenses, creating a 3D effect. To make the system, we disassembled the 3D glasses worn by the audience members and extracted the control board. We then used a microcontroller mounted on a custom control board to read the shutter drive signal, thereby synchronizing the LED strobe to the opening/closing of the 3D glasses lenses with a high degree of precision. The lighting devices were put to versatile use. Their handheld size allowed them to be used by dancers and floor staff to illuminate the audience and performers. The devices could also be mounted atop the large mobile walls or on the winch system to be raised and lowered.
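The synchronization logic can be sketched in Python as a simple mapping from shutter state to lamp state. This is an illustration under assumptions, not the firmware used in the performance: it assumes the shutter drive signal can be read as two booleans (left lens open, right lens open), and that each floodlight is paired with one eye. The function name and the sample data are hypothetical.

```python
from typing import Iterable, List, Tuple


def lamp_commands(
    shutter_samples: Iterable[Tuple[bool, bool]],
) -> List[Tuple[bool, bool]]:
    """Map (left_open, right_open) samples to (lamp_a_on, lamp_b_on).

    Assumption: lamp A is paired with the left eye and lamp B with the
    right eye, so each eye only ever sees the shadow cast by "its" lamp.
    """
    commands = []
    for left_open, right_open in shutter_samples:
        # Fire a lamp only while exactly one lens is open, so that the
        # other eye never sees the shadow meant for its counterpart.
        lamp_a = left_open and not right_open
        lamp_b = right_open and not left_open
        commands.append((lamp_a, lamp_b))
    return commands


# Hypothetical samples: left open, both closed, right open, both closed,
# and a (normally impossible) state with both lenses open.
samples = [(True, False), (False, False), (False, True), (False, False), (True, True)]
commands = lamp_commands(samples)
```

Since the two lamps sit at slightly different positions, the shadow seen by each eye is offset, and that offset is what the viewer reads as depth.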

Basic Principles of the Imaging Technique

System Configuration

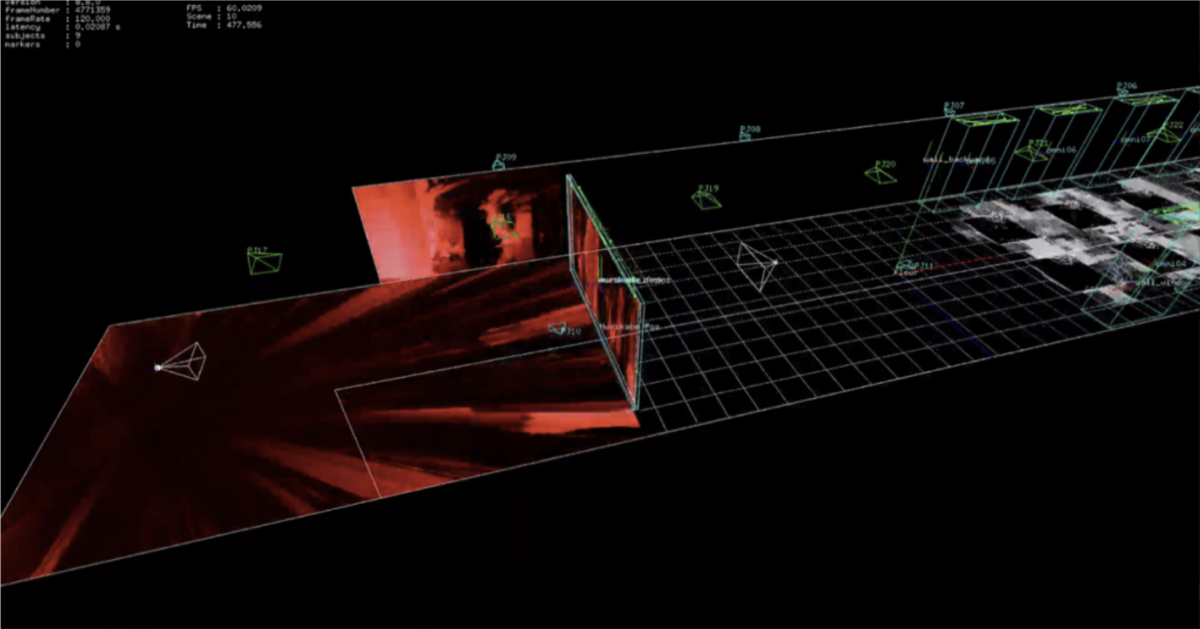

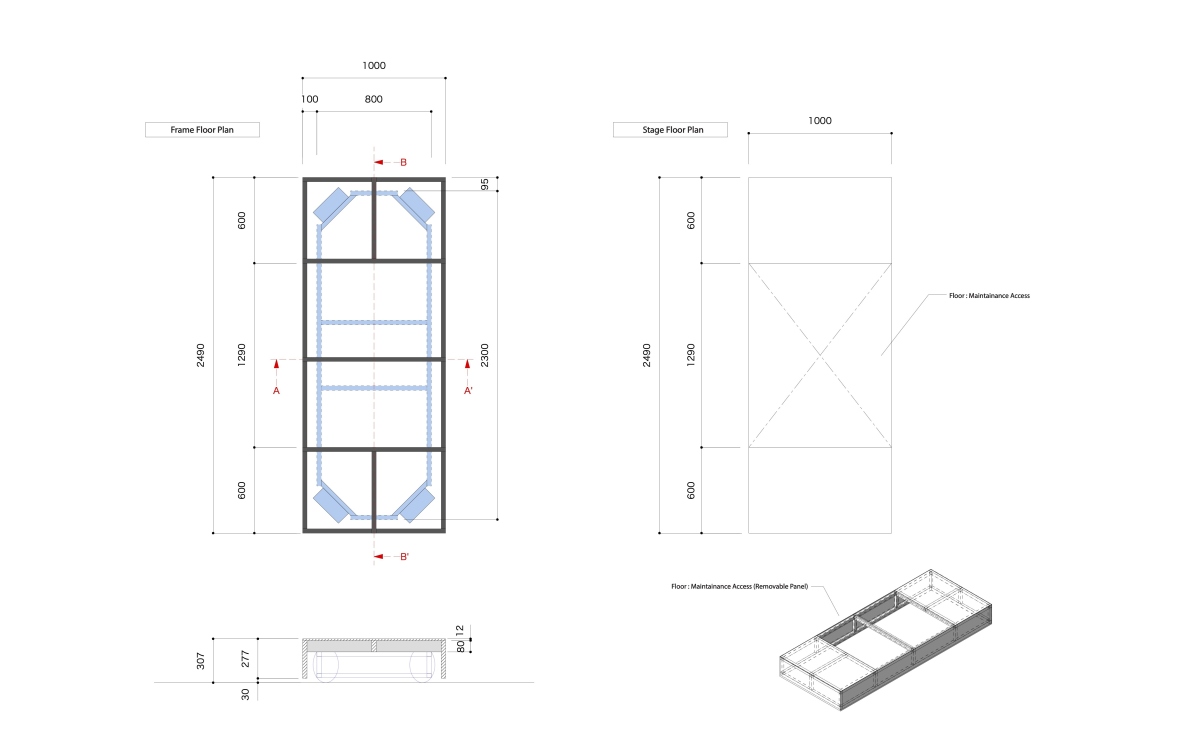

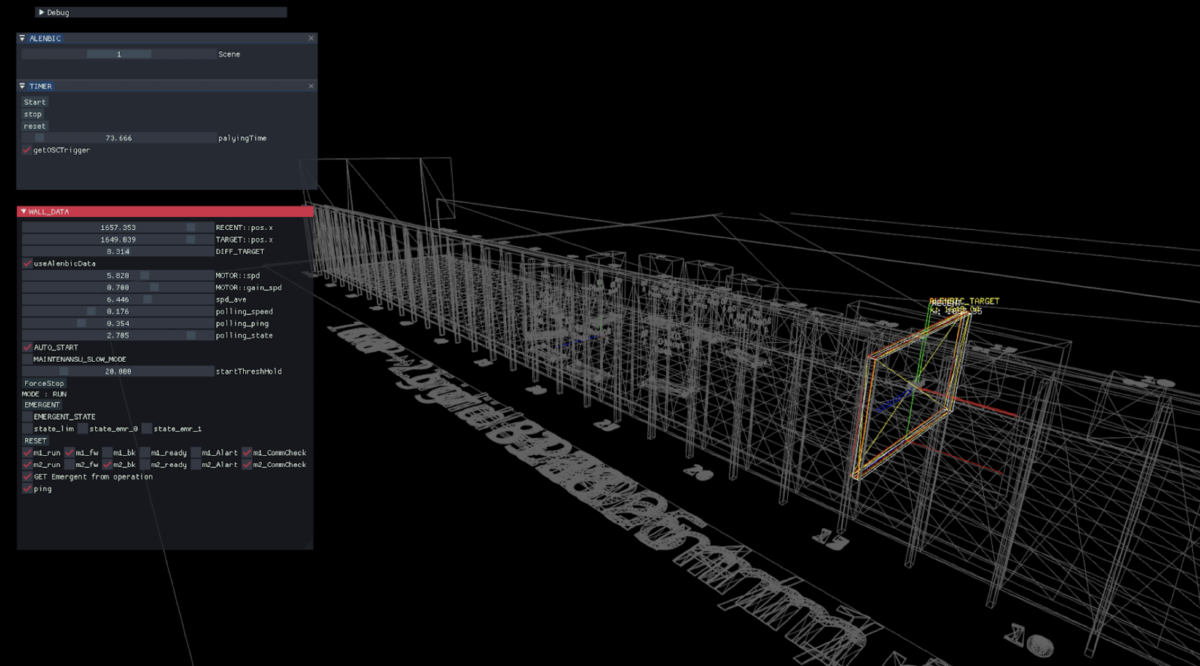

<Moving Trolley System>

photo by Muryo Homma

Eight large monolithic objects moved in formation throughout Gallery B, performing in coordination with the dancers, lighting, music, and video imagery. These monolithic objects, measuring 2 meters wide and 4 meters tall, were ferried through the gallery on a mobile trolley system developed by Rhizomatiks. In this case, we enlisted four motorized, omni-wheel trolleys that were precision-controlled with the aid of motion capture technology. Using infrared cameras to accurately detect the position of the trolleys, we were able to recreate the routes preprogrammed in CG software with a high degree of fidelity.
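A closed-loop route follower of this kind can be sketched in Python as below. The proportional controller, gain, speed limit, and waypoints are all assumptions chosen for illustration; the text does not describe the control law actually used. The simulation integrates ideal motion, where a real system would substitute the position measured by the infrared cameras at each step.

```python
import math


def velocity_command(measured, target, gain=1.5, max_speed=0.5):
    """Proportional velocity command (m/s) toward target, clipped to max_speed.

    An omni-wheel base can move in any direction, so x and y are
    commanded independently.
    """
    vx = gain * (target[0] - measured[0])
    vy = gain * (target[1] - measured[1])
    speed = math.hypot(vx, vy)
    if speed > max_speed:
        scale = max_speed / speed
        vx, vy = vx * scale, vy * scale
    return vx, vy


def follow_route(start, route, dt=0.05, tolerance=0.01, max_steps=10000):
    """Simulate a trolley visiting each waypoint in order; return the final position."""
    x, y = start
    for target in route:
        for _ in range(max_steps):
            if math.hypot(target[0] - x, target[1] - y) <= tolerance:
                break
            vx, vy = velocity_command((x, y), target)
            # A real system would read the next position from motion
            # capture here; this sketch integrates ideal motion instead.
            x += vx * dt
            y += vy * dt
    return x, y


# Hypothetical route in meters, standing in for a path exported from CG software.
route = [(2.0, 0.0), (2.0, 3.0), (0.0, 3.0)]
final_position = follow_route((0.0, 0.0), route)
```

Feeding the measured position back into the command at every step is what lets small wheel slip or floor irregularities be corrected rather than accumulate along the route.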

Control Panel

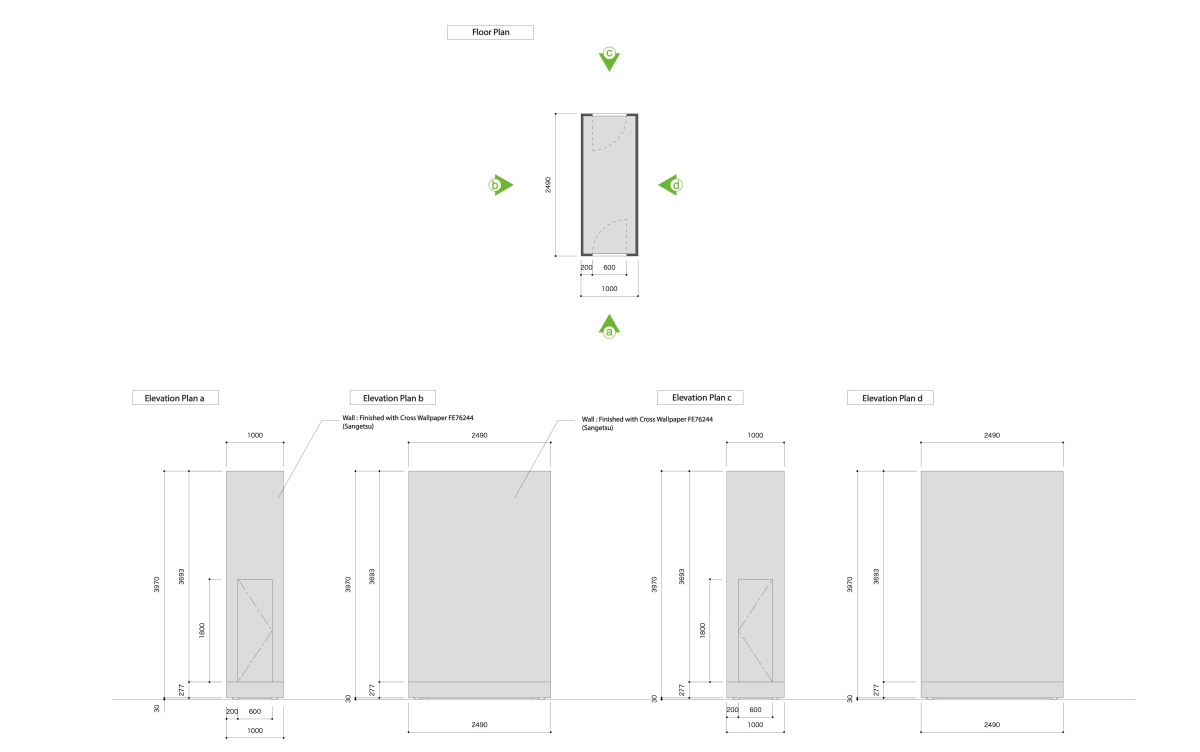

<Large Mobile Walls>

photos by Muryo Homma

The distinctive long, narrow space in Gallery B was divided into two by large walls which were made to move back and forth according to a predetermined sequence. The walls were outfitted with projectors and lighting devices that played a pivotal role throughout each scene. Firstly, the walls’ constant motion brought a heightened dynamism to the performance by altering the sense of volume in the space. Secondly, the system enabled the coexistence of two time continuums, one on either side of the walls. Furthermore, a central door allowed both performers and patrons to pass between the walls. At a certain point, this door even functioned as a mirror-like portal through which patrons could look across the timeline divide and see each other’s faces. In this way, the mobile wall opened possibilities for moments of reflexive, omniscient staging amidst the complex sequences.

The total weight of the 7.6 by 4 meter wall, plus 3D projectors and lighting equipment, worked out to around 600 kilograms. We installed industrial ceiling rails and rollers, and used two 400 W inverter motors to move all this weight smoothly. The walls had a maximum possible speed of 500 mm/s. In an actual performance setting, they reached speeds of around 300 mm/s. Nylon tires attached to the motors supplied around 200 N of force, which provided enough friction to move the structures along the rails. Vicon and infrared markers were used to measure positional information down to the millimeter, and this data was leveraged to create sequences that traced the CG movement. The video imagery projected on the mobile walls, motor control network, and DMX lighting were all hardwired and connected to a control panel through a third rail for the cables and numerous pulleys backstage.

(Wall Control Panel)

(Motor Drive Unit Testing)

(Blueprint)

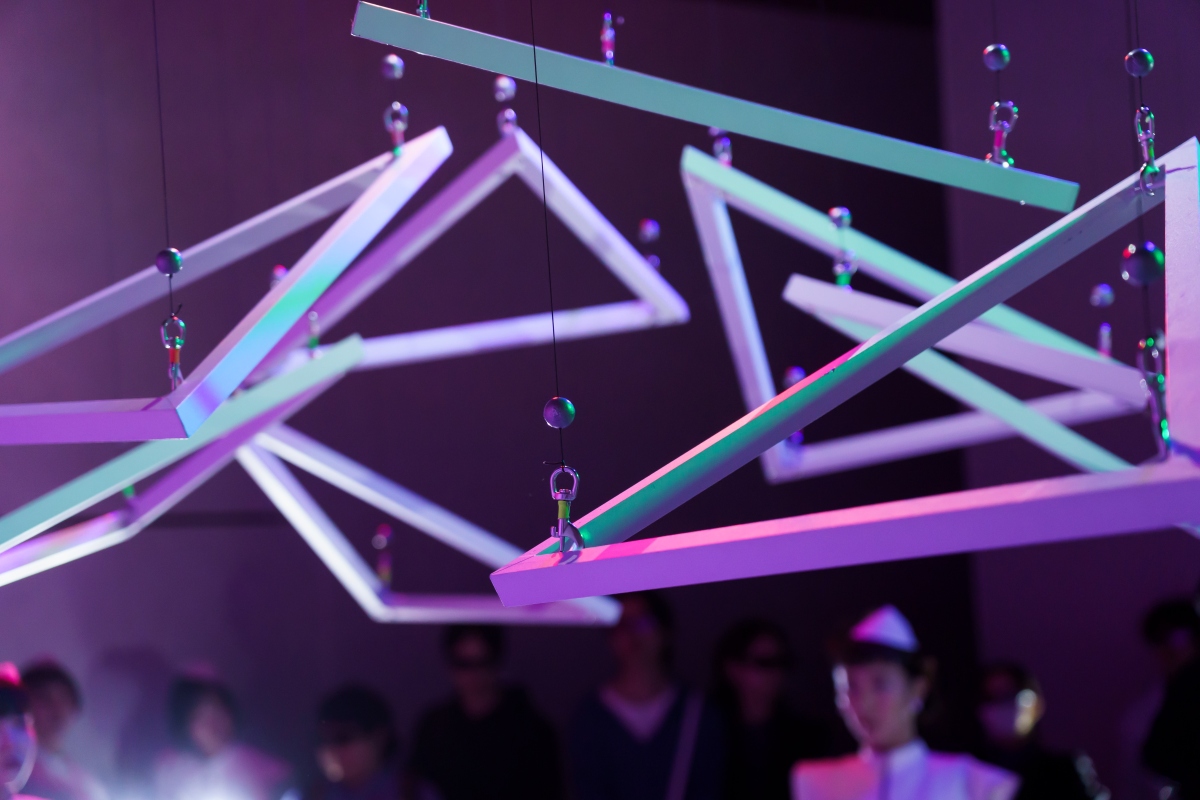

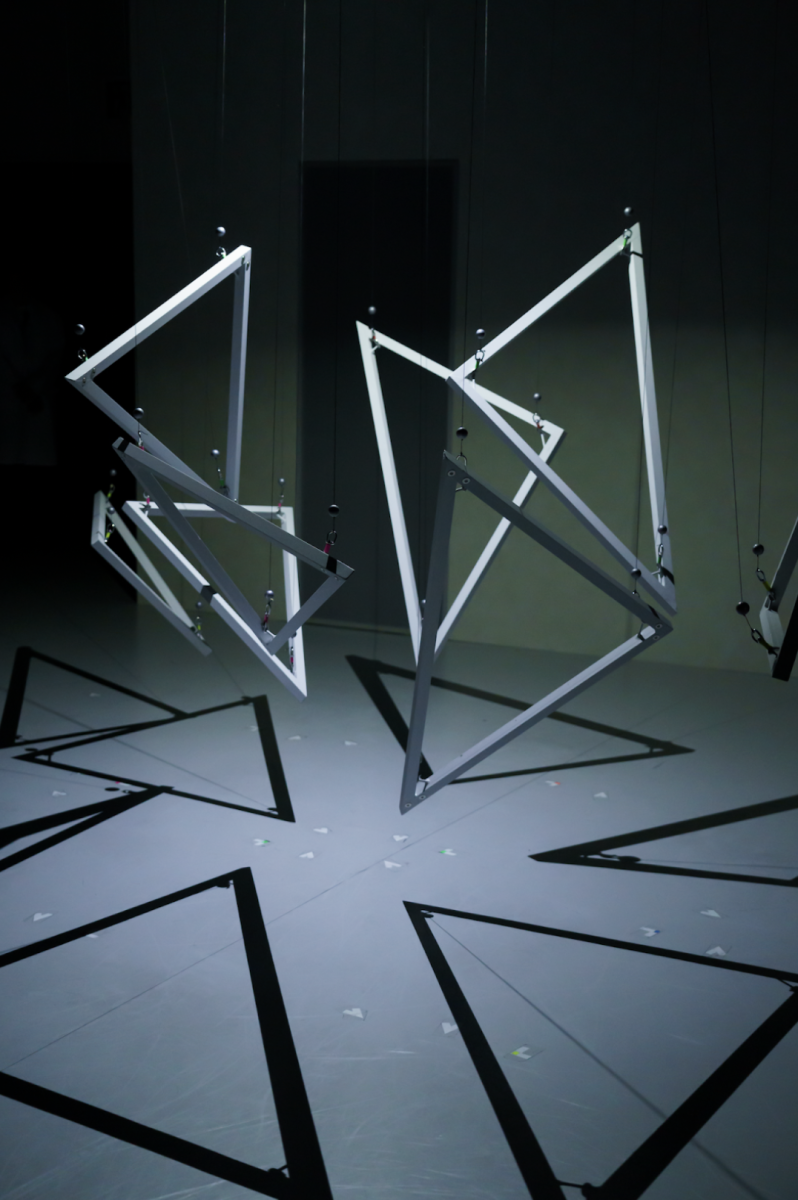

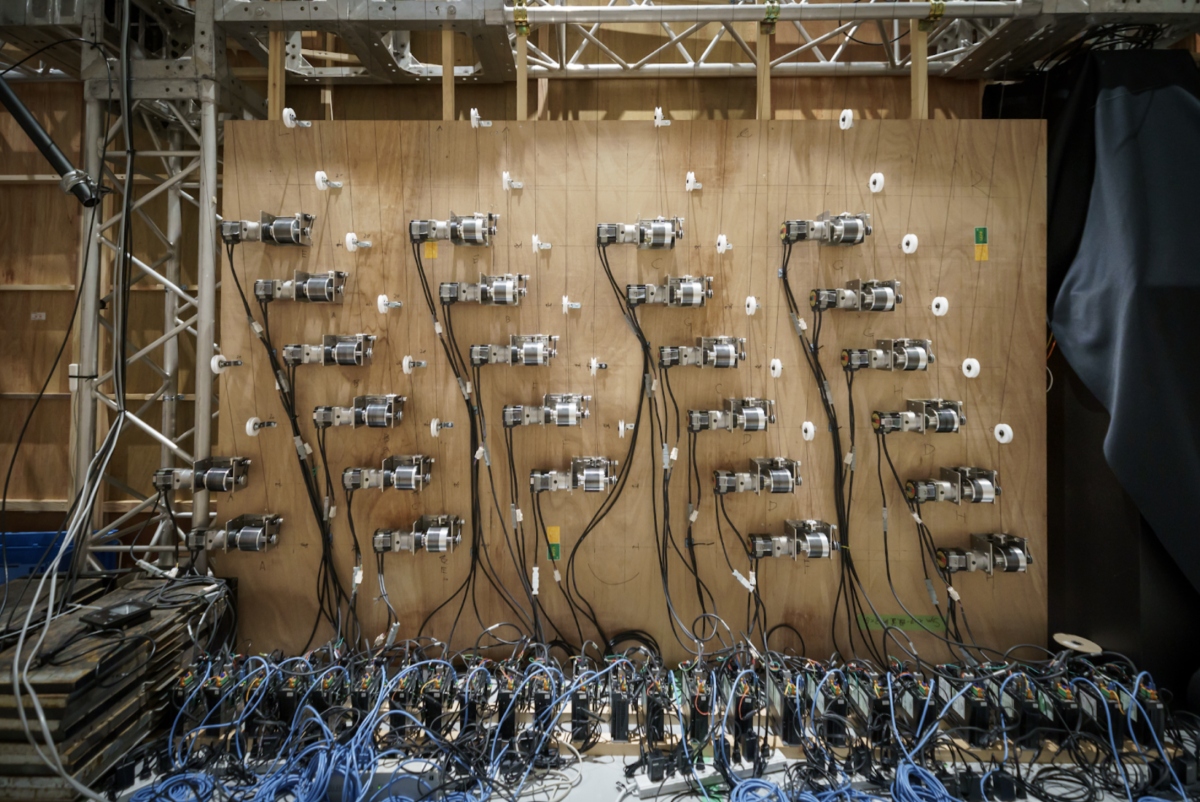

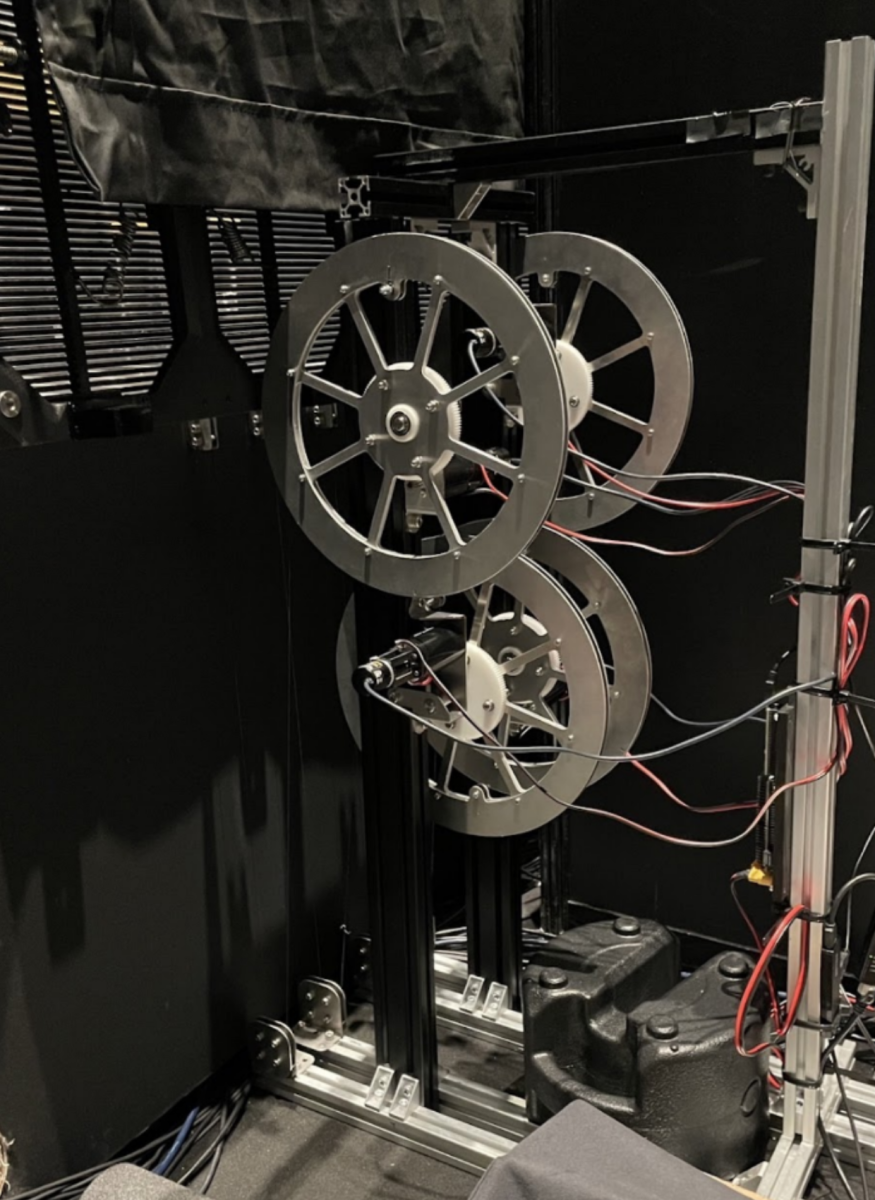

<Winch System>

(Pulleys, Carabiners)

photos by Muryo Homma

(3D shadow – floor projection)

photos by Muryo Homma

(Winch motors)

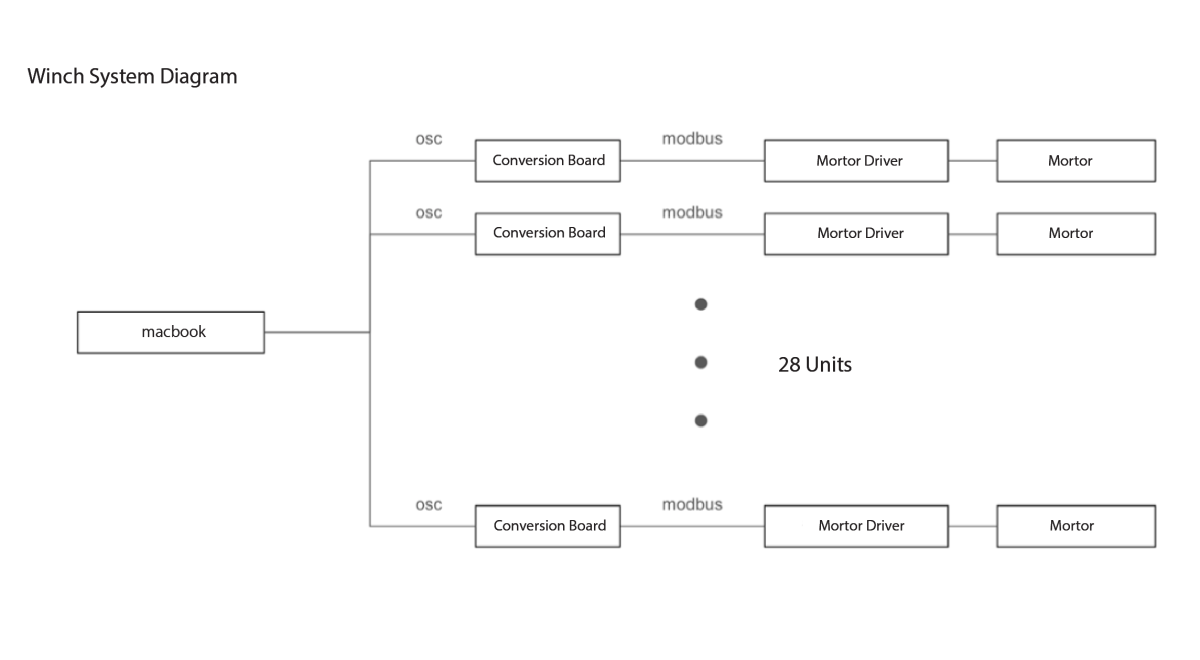

Midway through the Gallery B experience, dancers attached carabiners to eight triangular objects which were individually lofted from the ceiling with wires. Winches then adjusted the length of wire at the objects’ corners to control their height, roll, and pitch. Strobe lights affixed in the center of these triangular objects cast shadows on the floor and walls. When viewed through 3D glasses, these shadows appeared three-dimensional. We specifically designed the grooves of the winch drums as a safety measure to prevent the risk of wire detachment, whether due to wear and tear over repeated performances or human error when hooking up the triangular objects. Twenty-eight backstage motors reached into the exhibition space via pulleys mounted in three locations. In order to control the overall movement, the length of each wire was determined based on 3D data. This information was sent to the control board nearest each motor via OSC, then converted to Modbus to minimize latency.
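The step from a desired pose to individual wire lengths can be sketched in Python as follows. Everything geometric here is an assumption for illustration: an equilateral triangle with a 0.5 m circumradius, pulleys directly above each corner's rest position on a 4 m ceiling, and millimeter-scaled values packed into 16-bit registers (the width of a Modbus holding register). The OSC and Modbus transport itself is not shown.

```python
import math


def rotate_roll_pitch(point, roll, pitch):
    """Rotate (x, y, z) by roll about the x-axis, then pitch about the y-axis (radians)."""
    x, y, z = point
    y, z = (y * math.cos(roll) - z * math.sin(roll),
            y * math.sin(roll) + z * math.cos(roll))
    x, z = (x * math.cos(pitch) + z * math.sin(pitch),
            -x * math.sin(pitch) + z * math.cos(pitch))
    return x, y, z


def wire_lengths(height, roll, pitch, radius=0.5, ceiling=4.0):
    """Wire length (m) for each of the three corners of one triangular object.

    radius and ceiling are hypothetical dimensions, not values from the text.
    """
    lengths = []
    for k in range(3):
        angle = 2.0 * math.pi * k / 3.0
        local = (radius * math.cos(angle), radius * math.sin(angle), 0.0)
        cx, cy, cz = rotate_roll_pitch(local, roll, pitch)
        corner = (cx, cy, cz + height)
        # Assumed pulley position: straight above the corner's level position.
        pulley = (local[0], local[1], ceiling)
        lengths.append(math.dist(pulley, corner))
    return lengths


def length_to_register(length_m):
    """Convert a length in meters to whole millimeters, clamped to 16 bits."""
    return max(0, min(65535, round(length_m * 1000.0)))


level = wire_lengths(height=1.0, roll=0.0, pitch=0.0)
tilted = wire_lengths(height=1.0, roll=0.0, pitch=0.2)
registers = [length_to_register(length) for length in level]
```

With the object level at 1 m, all three wires come out at 3 m. Adding pitch lowers one corner and lengthens its wire, which is the kind of per-wire difference the winches would need to track.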

(System configuration)

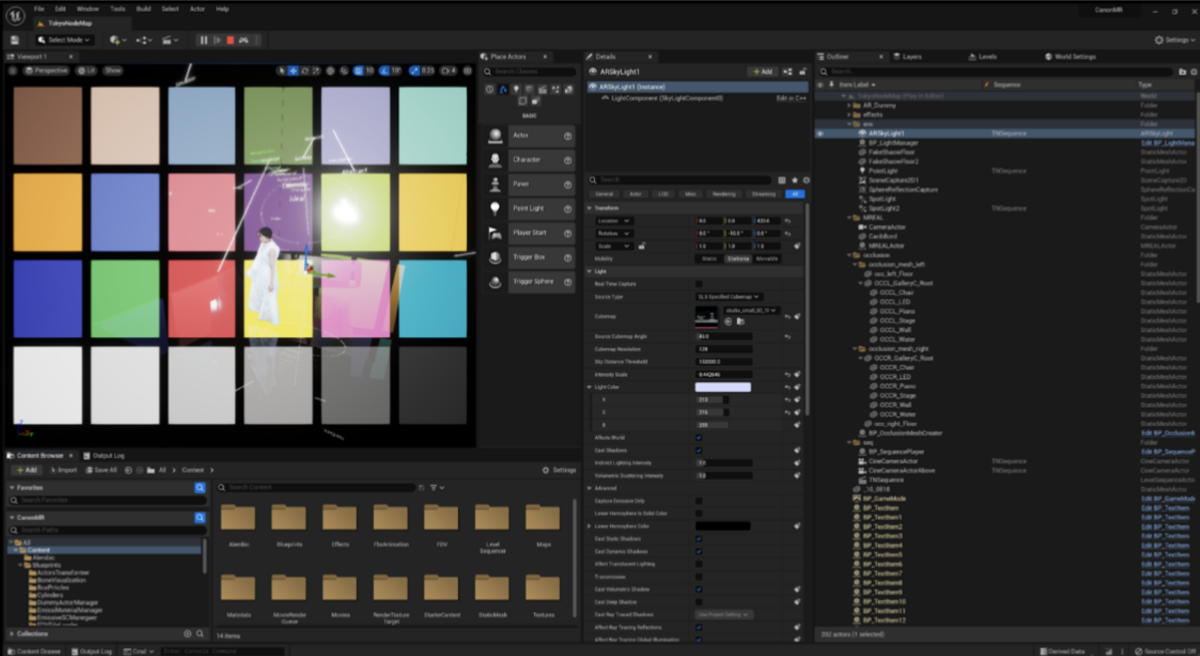

◉GALLERY C

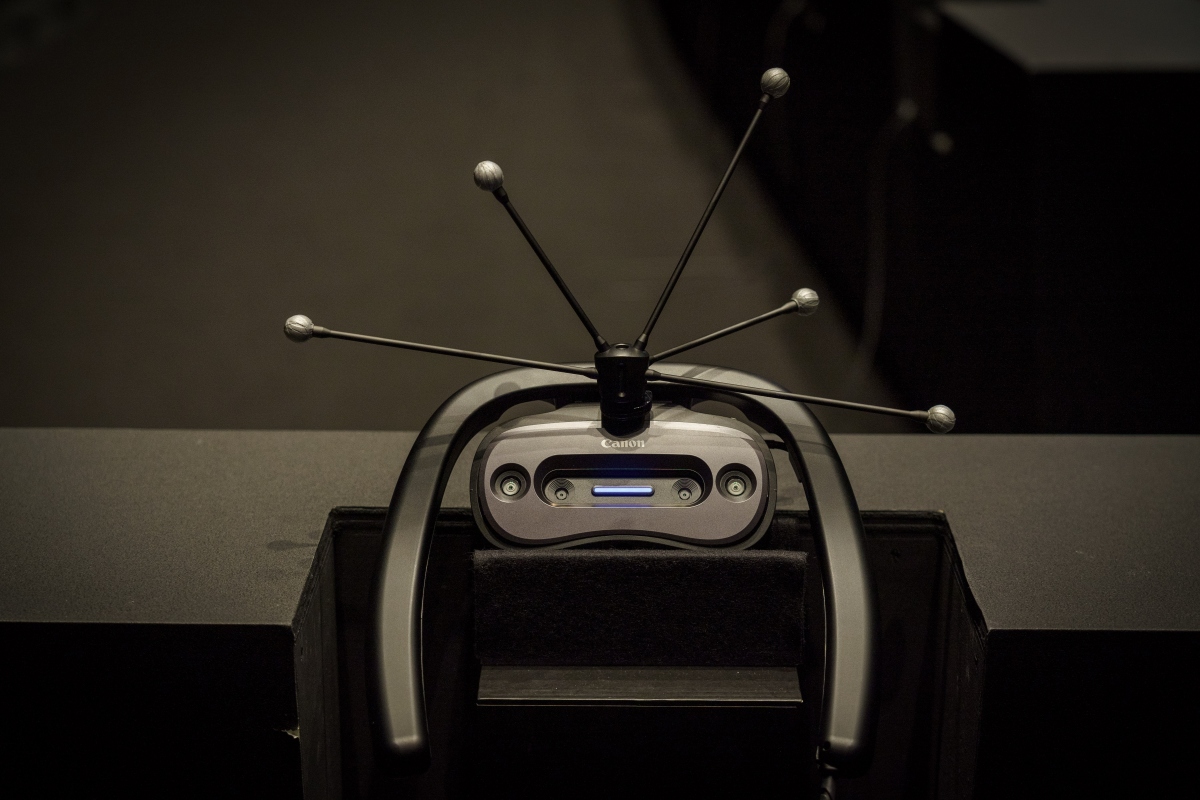

<MREAL>

photos by Muryo Homma

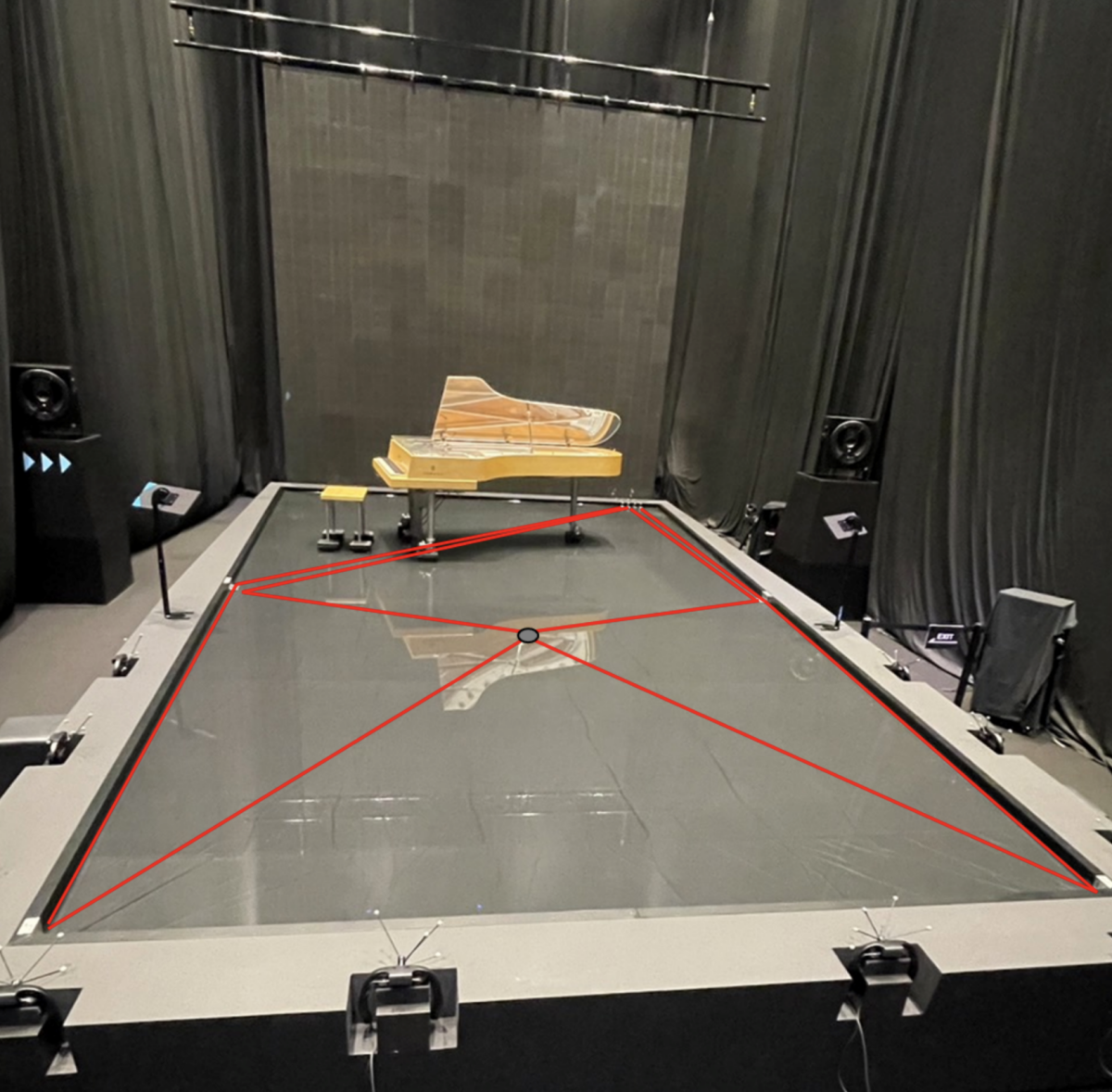

The virtual world seen through the MR devices was fully synchronized with the real LED display in the physical gallery space, self-playing piano music, and ripple patterns in the water.

We built a pseudo-reflective floor plate in CG to give the illusion that the AR graphics were reflected on the water surface, further heightening the CG’s realism.

For the benefit of patrons awaiting their turn with an MREAL headset, we displayed the silhouette of the AR dancer on the LED backdrop. When the patrons donned a headset, they would realize the shadow was part of an AR dance performance.

(Control panel)

(System configuration)

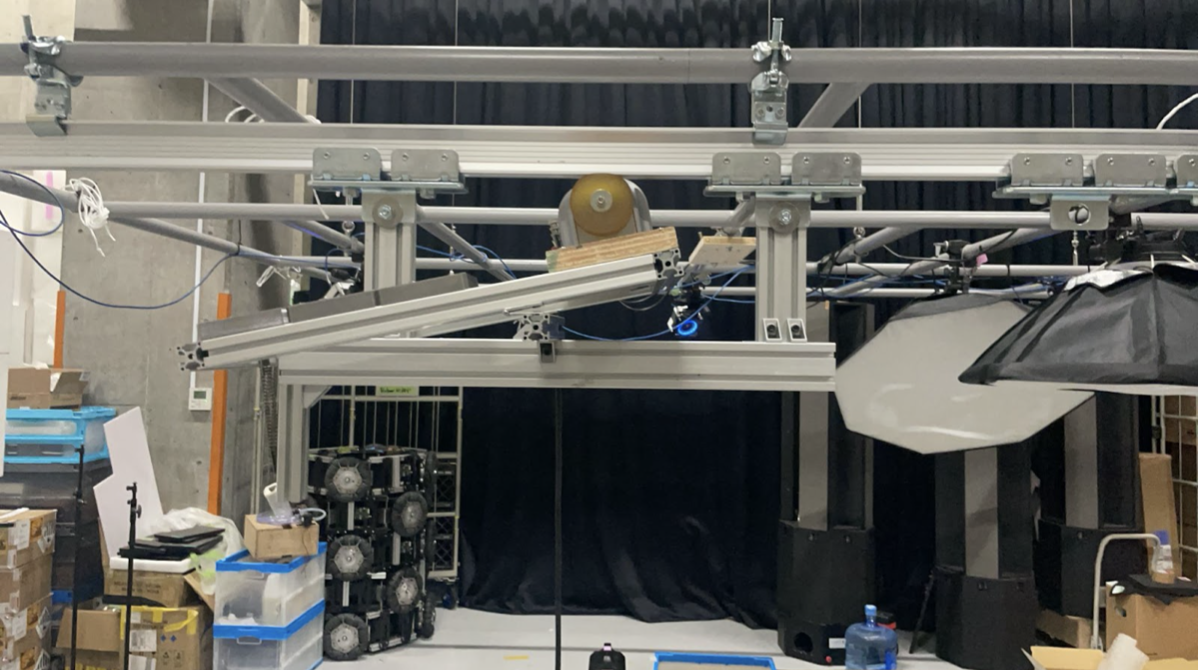

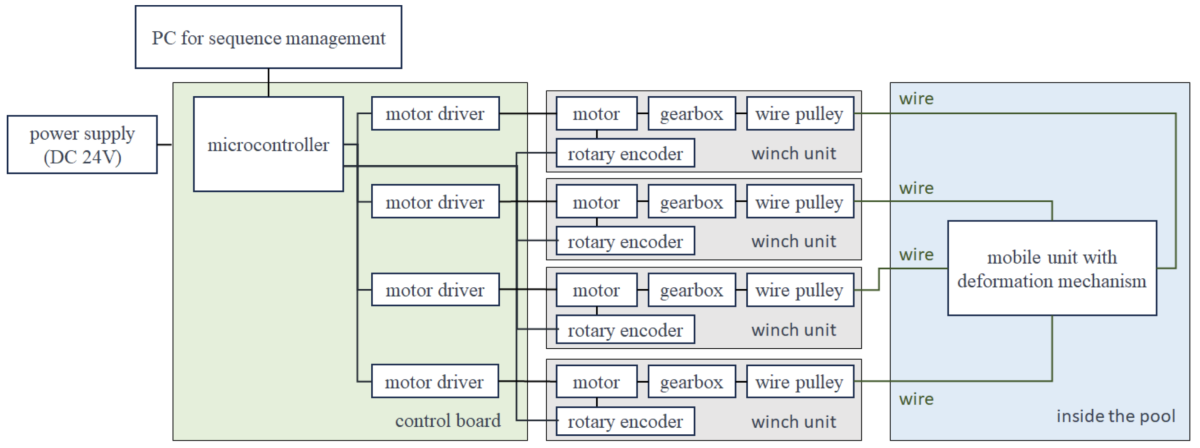

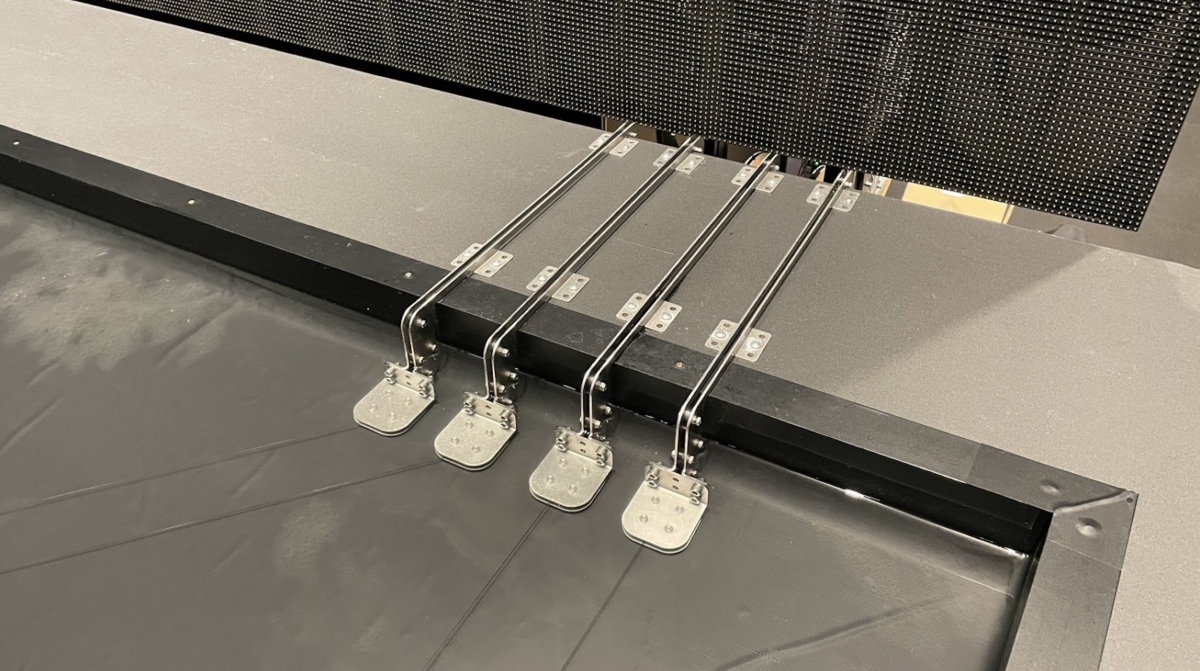

<Ripple control system>

photo by Muryo Homma

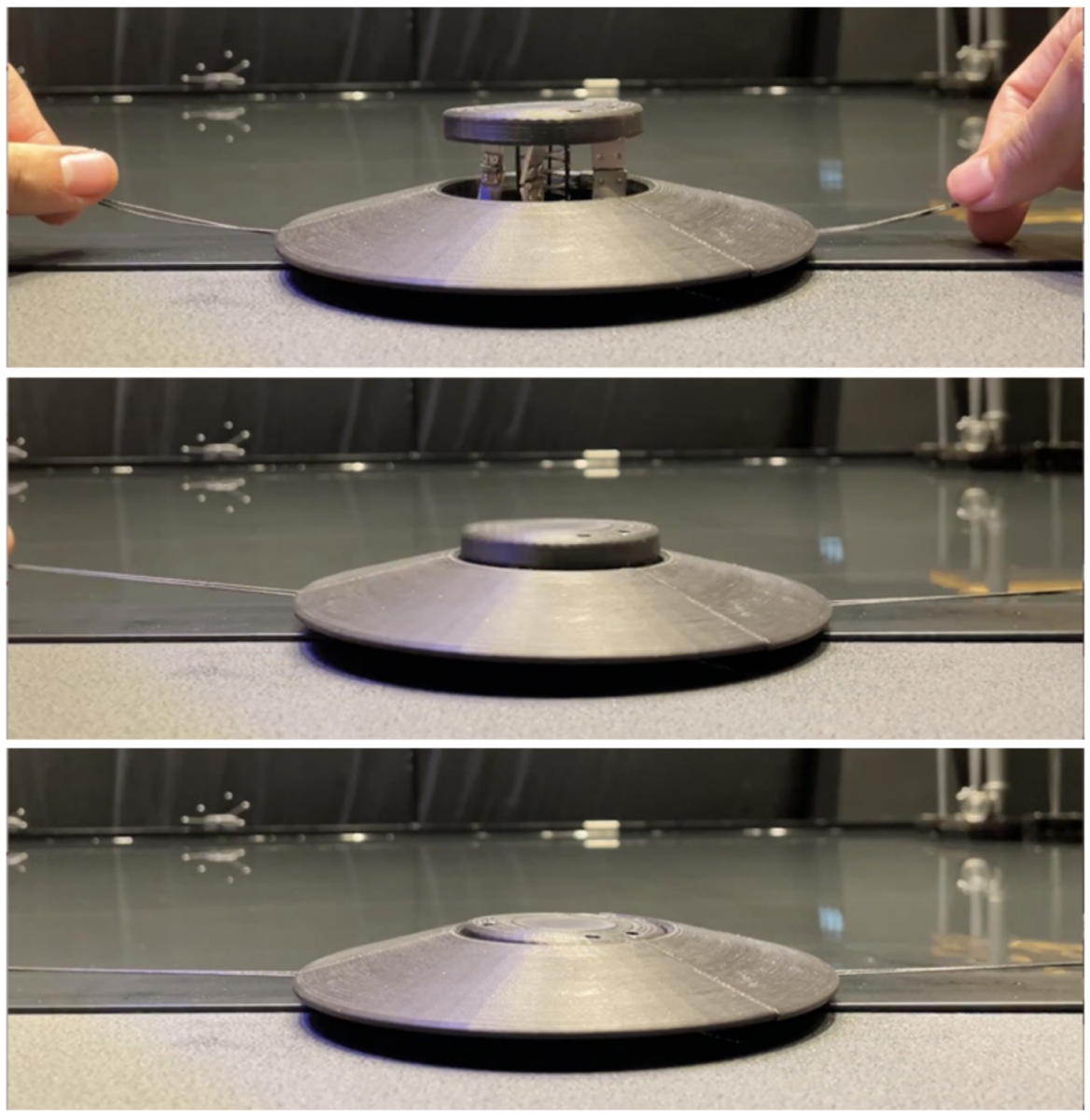

We developed a device capable of creating ripples in an onstage tank according to a stipulated pattern. The device uses four wires to pull a ripple-making element through the tank, allowing adjustment of the quality and shape of the waves. By controlling wire length, speed, and tension, we achieved a dynamic range of expression.

The movable element reached accelerations of up to 1 G and a maximum speed of 1.2 m/s. These mechanisms were installed only on the patron-facing side of the completed water tank. In order to generate waves in response to the virtual dancer’s feet, we also developed an algorithm that analyzed the dancer’s volumetric data and dynamically generated trajectories for those ripples at each moment. As a result, we were able to create waves that responded organically, in close sync with the movement of the dancer’s feet and the brush of their dress against the water’s surface.
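A minimal Python sketch of the two calculations such a system needs is shown below: wire lengths for a target position, and a check that a sampled trajectory respects the stated limits. The rectangular layout with one anchor per corner, the 4 m by 2 m working area, and the function names are assumptions for illustration. The limits come from the text, reading the 1 G figure as one standard gravity.

```python
import math

MAX_SPEED = 1.2      # m/s, from the text
MAX_ACCEL = 9.80665  # m/s^2, reading "1 G" as one standard gravity


def ripple_wire_lengths(position, width=4.0, depth=2.0):
    """Length (m) of each of the four wires for an element at (x, y).

    Assumes one anchor at each corner of a width x depth rectangle;
    these dimensions are hypothetical.
    """
    anchors = [(0.0, 0.0), (width, 0.0), (width, depth), (0.0, depth)]
    return [math.dist(position, anchor) for anchor in anchors]


def within_limits(points, dt):
    """True if a trajectory sampled every dt seconds stays within both limits."""
    velocities = [
        ((x1 - x0) / dt, (y1 - y0) / dt)
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    ]
    if any(math.hypot(vx, vy) > MAX_SPEED for vx, vy in velocities):
        return False
    accelerations = [
        ((vx1 - vx0) / dt, (vy1 - vy0) / dt)
        for (vx0, vy0), (vx1, vy1) in zip(velocities, velocities[1:])
    ]
    return all(math.hypot(ax, ay) <= MAX_ACCEL for ax, ay in accelerations)


center_lengths = ripple_wire_lengths((2.0, 1.0))
ok = within_limits([(0.0, 0.0), (0.01, 0.0), (0.02, 0.0)], dt=0.01)        # 1.0 m/s
too_fast = within_limits([(0.0, 0.0), (0.02, 0.0), (0.04, 0.0)], dt=0.01)  # 2.0 m/s
```

A trajectory generated from the dancer data could be passed through a check like `within_limits` before being converted to wire lengths, so that requests the mechanism cannot follow are caught early.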

(System configuration)

(Ripple-generating device)

(Mechanism guiding the wires from the ripple generator into the pool.)

(The wave pattern is controlled by adjusting the wire tension.)

(Wire routing inside the pool)